logreg


8/13/2019 logreg


Binary Choice Models

1. Binary Dependent Variables
2. Probit and Logit Regression
3. Maximum Likelihood Estimation
4. Estimating Binary Models in EViews
5. Measures of Goodness of Fit
6. Other Limited Dependent Variable Models
7. Exercise


1 Binary Dependent Variables

The variable of interest $Y$ is binary. The two possible outcomes are labeled 0 and 1. We want to model $Y$ as a function of explanatory variables $X = (X_1, \dots, X_p)$.

Example: $Y$ = employed (1) or unemployed (0); $X$ = educational level, age, marital status, ...

Example: $Y$ = expansion (1) or recession (0); $X$ = unemployment level, inflation, ...


Can we still use linear regression? In that case

$$E[Y \mid X] = \beta_0 + \beta_1 X_1 + \dots + \beta_p X_p$$

and the OLS fitted values are given by

$$\hat{Y} = \hat\beta_0 + \hat\beta_1 X_1 + \dots + \hat\beta_p X_p.$$

Problem: the left-hand side of the above equations should lie between 0 and 1, while the right-hand side may take any value on the real line.

Note that

$$E[Y \mid X] = 0 \cdot P(Y = 0 \mid X) + 1 \cdot P(Y = 1 \mid X) = P(Y = 1 \mid X).$$

The conditional expected values are conditional probabilities.
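The problem is easy to see numerically. The sketch below is a minimal illustration on synthetic data (the S-shaped data-generating curve, the sample size and the random seed are assumptions, not taken from the text): it fits OLS of a 0/1 outcome on a constant and x with NumPy, then evaluates the fitted line at the ends of the x range, where it falls below 0 and rises above 1.

```python
import numpy as np

# Synthetic data (assumption for illustration): x uniform on [0, 10] and
# P(Y = 1 | x) following an S-shaped curve centred at x = 5.
rng = np.random.default_rng(0)
n = 200
x = rng.uniform(0, 10, size=n)
p_true = 1.0 / (1.0 + np.exp(-2.0 * (x - 5.0)))
y = (rng.uniform(size=n) < p_true).astype(float)

# OLS of y on a constant and x (the linear probability model).
X = np.column_stack([np.ones(n), x])
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)

# Fitted values at the left end, the middle and the right end of the x range.
x_grid = np.array([0.0, 5.0, 10.0])
y_hat = beta_hat[0] + beta_hat[1] * x_grid
print("OLS coefficients:", beta_hat)
print("fitted values at x = 0, 5, 10:", y_hat)
```

The fitted "probabilities" at x = 0 and x = 10 are negative and larger than one, respectively, which is exactly the mismatch described above.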


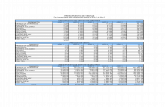

[Figure: binary outcome y (0 or 1) plotted against x (0 to 10) as a data cloud, with a linear fit and an S-shaped fit.]


2 Probit and Logit Regression

Binary regression model:

$$P(Y = 1 \mid X) = F(\beta_0 + \beta_1 X_1 + \dots + \beta_p X_p)$$

with

(a) $F(u) = \dfrac{1}{1 + \exp(-u)}$: Logit

(b) $F(u) = \Phi(u)$, the standard normal cumulative distribution function: Probit

(c) ...

In general, $0 < F(u) < 1$ and $F$ is increasing.
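As a small illustration, the two link functions can be written with the Python standard library only. This is a sketch, not the implementation used by any particular package: $\Phi$ is computed through `math.erf`, and the logistic CDF is split into two branches only to avoid overflow in `math.exp` for large $|u|$.

```python
import math

def logit_cdf(u: float) -> float:
    """Logistic CDF F(u) = 1 / (1 + exp(-u)), the logit link."""
    # Two algebraically equal forms; each avoids overflow on its half-line.
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)

def probit_cdf(u: float) -> float:
    """Standard normal CDF Phi(u), the probit link."""
    return 0.5 * (1.0 + math.erf(u / math.sqrt(2.0)))

for u in (-3.0, 0.0, 3.0):
    print(f"u = {u:+.1f}: logit F(u) = {logit_cdf(u):.4f}, probit F(u) = {probit_cdf(u):.4f}")
```

Both functions map the whole real line into $(0, 1)$ and are increasing, which is all the binary regression model requires of $F$.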


[Figure: the same data cloud of y against x, with fitted probit and logit curves.]

The difference is small; the probit function is steeper.
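One way to quantify "steeper" is to compare the slopes of the two standard link functions at $u = 0$, i.e. their densities there. The sketch below does this; the ratio of about 1.6 is also behind a commonly cited rule of thumb that logit coefficients are roughly 1.6 times the probit coefficients fitted to the same data (an approximation, not an exact relation).

```python
import math

def logistic_pdf(u):
    """Slope of the logistic CDF: F'(u) = F(u) (1 - F(u)). Intended for moderate u."""
    f = 1.0 / (1.0 + math.exp(-u))
    return f * (1.0 - f)

def normal_pdf(u):
    """Slope of Phi: the standard normal density."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)

print("slope at 0, logit :", logistic_pdf(0.0))                 # 0.25
print("slope at 0, probit:", normal_pdf(0.0))                   # about 0.399
print("ratio probit/logit:", normal_pdf(0.0) / logistic_pdf(0.0))  # about 1.6
```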


Prediction

For an observation $x_i = (x_{i1}, \dots, x_{ip})$ we predict the probability of success as

$$\hat{P}(Y = 1 \mid X = x_i) = F(\hat\beta_0 + \hat\beta_1 x_{i1} + \dots + \hat\beta_p x_{ip}).$$

Set $\hat{y}_i = 1$ if $\hat{P}(Y = 1 \mid X = x_i) > 0.5$ and $\hat{y}_i = 0$ otherwise. (Cut-off values other than 0.5 = 50% are sometimes used.)
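The prediction rule can be sketched in a few lines. The coefficient values below are hypothetical, chosen only to illustrate the computation, and the logit link is assumed; a probit version would only swap the function `F`.

```python
import math

def logit_cdf(u):
    return 1.0 / (1.0 + math.exp(-u))

def predict_proba(beta_hat, x_i, F=logit_cdf):
    """Estimated P(Y = 1 | X = x_i) = F(b0 + b1*x_i1 + ... + bp*x_ip)."""
    index = beta_hat[0] + sum(b * xj for b, xj in zip(beta_hat[1:], x_i))
    return F(index)

def classify(p_hat, cutoff=0.5):
    """Predicted outcome: 1 if the estimated probability exceeds the cut-off, else 0."""
    return 1 if p_hat > cutoff else 0

# Hypothetical estimated coefficients (b0, b1, b2), for illustration only.
beta_hat = [-4.0, 0.8, 1.5]
for x_i in ([2.0, 0.0], [3.0, 1.0], [6.0, 1.0]):
    p = predict_proba(beta_hat, x_i)
    print(x_i, round(p, 3), "cut-off 0.5:", classify(p), "cut-off 0.95:", classify(p, cutoff=0.95))
```

Raising the cut-off makes the rule predict a success less often, which is why the choice of cut-off affects the classification table.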


3 Maximum Likelihood Estimation (MLE)

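General principle: let $L(\theta)$ be the likelihood, or joint density, of the observations $y_1, \dots, y_n$, depending on an unknown parameter $\theta$:

$$L(\theta) = \prod_{i=1}^{n} f(y_i, \theta)$$

(this assumes independent observations). The maximum likelihood estimator $\hat\theta$ is the value of $\theta$ maximizing $L(\theta)$, or equivalently its logarithm:

$$\hat\theta = \arg\max_{\theta} \log L(\theta) = \arg\max_{\theta} \sum_{i=1}^{n} \log f(y_i, \theta).$$

Denote $L_{\max} = L(\hat\theta)$.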
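The general principle can be sketched as a crude numerical argmax: evaluate $\sum_i \log f(y_i, \theta)$ on a grid of candidate values and keep the best one. The grid search, the Bernoulli log-density used as a test case and the small 0/1 sample are all illustrative assumptions; real software uses iterative optimizers instead of a grid.

```python
import math

def log_likelihood(theta, ys, log_f):
    """log L(theta) = sum_i log f(y_i, theta), assuming independent observations."""
    return sum(log_f(y, theta) for y in ys)

def grid_mle(ys, log_f, grid):
    """Grid point that maximizes the log-likelihood (a crude numerical argmax)."""
    return max(grid, key=lambda theta: log_likelihood(theta, ys, log_f))

def bernoulli_log_f(y, p):
    # log f(y, p) for a 0/1 outcome y with success probability p in (0, 1).
    return y * math.log(p) + (1 - y) * math.log(1 - p)

ys = [1, 0, 1, 1, 0, 1, 0, 1]               # hypothetical 0/1 sample
grid = [k / 1000 for k in range(1, 1000)]   # candidate p values in (0, 1)
theta_hat = grid_mle(ys, bernoulli_log_f, grid)
log_L_max = log_likelihood(theta_hat, ys, bernoulli_log_f)
print("theta_hat =", theta_hat, " log Lmax =", log_L_max)
```

For this sample the maximizer is the share of ones, 5/8 = 0.625, which happens to lie on the grid.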


MLE for Bernoulli Variables

Let $y_i$ be the outcome of a 0/1 (failure/success) experiment, and let $p$ be the probability of success. Then $f(1, p) = p$ and $f(0, p) = 1 - p$, hence

$$f(y_i, p) = p^{y_i} (1 - p)^{1 - y_i}.$$

The MLE $\hat{p}$ maximizes

$$\sum_{i=1}^{n} \{ y_i \log(p) + (1 - y_i) \log(1 - p) \}.$$

It is not difficult to check that $\hat{p} = \frac{1}{n} \sum_{i=1}^{n} y_i$, the proportion of successes in the sample.
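The check is a one-line calculus argument. Writing $S = \sum_{i=1}^{n} y_i$ for the number of successes, the log-likelihood and its derivatives are:

```latex
\ell(p) = \sum_{i=1}^{n} \{ y_i \log p + (1 - y_i)\log(1 - p) \}
        = S \log p + (n - S)\log(1 - p)

\ell'(p) = \frac{S}{p} - \frac{n - S}{1 - p} = 0
  \;\Longleftrightarrow\; S(1 - p) = (n - S)\,p
  \;\Longleftrightarrow\; \hat p = \frac{S}{n} = \frac{1}{n}\sum_{i=1}^{n} y_i

\ell''(p) = -\frac{S}{p^2} - \frac{n - S}{(1 - p)^2} < 0
  \quad \text{(for } 0 < S < n\text{)}
```

Since the second derivative is negative, the stationary point is the maximum.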


MLE for Probit Model

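We condition on the explanatory variables, i.e. we keep them fixed. Then

$$f(y_i, p_i) = p_i^{y_i} (1 - p_i)^{1 - y_i} \quad \text{with} \quad p_i = \Phi(\beta_0 + \beta_1 X_{i1} + \dots + \beta_p X_{ip}).$$

The MLE $\hat\beta = (\hat\beta_0, \hat\beta_1, \dots, \hat\beta_p)$ maximizes

$$\sum_{i=1}^{n} \{ y_i \log \Phi(\beta_0 + \beta_1 X_{i1} + \dots + \beta_p X_{ip}) + (1 - y_i) \log(1 - \Phi(\beta_0 + \beta_1 X_{i1} + \dots + \beta_p X_{ip})) \}.$$

There is no closed-form solution, so the MLE has to be computed with a numerical optimization algorithm on the computer. (The same holds for the logit model.)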
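A minimal sketch of such a numerical algorithm is Newton-Raphson. We show the logit case, because its score and Hessian are especially simple; a probit version would replace the logistic function and its derivative by $\Phi$ and the normal density. The function names, tolerance and synthetic data (true coefficients $-1$ and $2$) are assumptions for illustration only.

```python
import numpy as np

def fit_logit_newton(X, y, tol=1e-10, max_iter=50):
    """Logit MLE by Newton-Raphson. X must include a column of ones for beta_0."""
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))   # fitted probabilities F(X beta)
        score = X.T @ (y - p)                 # gradient of the log-likelihood
        w = p * (1.0 - p)                     # logistic density at X beta
        hessian = -(X.T * w) @ X              # -X' diag(w) X, negative definite
        step = np.linalg.solve(hessian, score)
        beta = beta - step                    # Newton update
        if np.max(np.abs(step)) < tol:
            break
    return beta

def logit_loglik(beta, X, y):
    """Sum of y_i log p_i + (1 - y_i) log(1 - p_i) for the logit model."""
    p = 1.0 / (1.0 + np.exp(-X @ beta))
    return float(np.sum(y * np.log(p) + (1 - y) * np.log(1 - p)))

# Synthetic data (assumption): one regressor, true beta = (-1.0, 2.0).
rng = np.random.default_rng(1)
n = 2000
x = rng.normal(size=n)
X = np.column_stack([np.ones(n), x])
y = (rng.uniform(size=n) < 1.0 / (1.0 + np.exp(-(-1.0 + 2.0 * x)))).astype(float)

beta_hat = fit_logit_newton(X, y)
print("beta_hat:", beta_hat, " log Lmax:", logit_loglik(beta_hat, X, y))
```

At the solution the score is (numerically) zero, which is the first-order condition of the maximization above.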


If the model is correctly specified, then

1. the MLE is consistent and asymptotically normal;

2. the MLE is asymptotically the most precise estimator, hence efficient;

3. inference (testing, confidence intervals) can be done.

If the model is misspecified, the MLE may lose the above properties.
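Asymptotic normality is what makes the usual z-test and confidence interval work. The sketch below assumes an estimate and its standard error are already available (the numbers are hypothetical) and computes the z-statistic, the two-sided P-value and a 95% confidence interval from the standard normal distribution.

```python
import math

def wald_inference(estimate, std_error, z_crit=1.959963984540054):
    """z-statistic, two-sided P-value and 95% CI based on asymptotic normality of the MLE."""
    z = estimate / std_error
    p_value = math.erfc(abs(z) / math.sqrt(2.0))   # equals 2 * (1 - Phi(|z|))
    ci = (estimate - z_crit * std_error, estimate + z_crit * std_error)
    return z, p_value, ci

# Hypothetical estimate and standard error, for illustration only.
z, p, ci = wald_inference(estimate=0.9, std_error=0.45)
print(f"z = {z:.2f}, P-value = {p:.4f}, 95% CI = ({ci[0]:.3f}, {ci[1]:.3f})")
```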

4 Estimating Binary Models in EViews

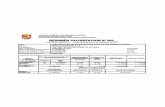

(1) We first regress deny on a constant, black and pi_rat. In EViews, in the equation specification under Estimation Settings, we choose Method: BINARY - Binary Choice and select logit.


Both explanatory variables are highly significant. They have a positive effect on the probability that the application is denied (deny = 1), as expected. They are also jointly highly significant (LR stat = 152, P …)


Predictive accuracy (Expectation-Prediction table):

88% of the observations are correctly classified, with a sensitivity of only 4.2% and a specificity of 99.7%. The gain is only 0.25 percentage points with respect to a majority forecast (i.e. predicting that all applications are accepted).

(2) Repeat the analysis, now with all predictor variables.
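The quantities in the Expectation-Prediction table can be reproduced from the observed outcomes and the 0/1 predictions. The sketch below treats 1 as the event of interest (a denied application in the example); the ten observations are hypothetical and chosen so that the arithmetic is easy to follow.

```python
def classification_summary(y, y_hat):
    """Accuracy, sensitivity, specificity and gain over the majority forecast."""
    tp = sum(1 for a, b in zip(y, y_hat) if a == 1 and b == 1)
    tn = sum(1 for a, b in zip(y, y_hat) if a == 0 and b == 0)
    fp = sum(1 for a, b in zip(y, y_hat) if a == 0 and b == 1)
    fn = sum(1 for a, b in zip(y, y_hat) if a == 1 and b == 0)
    n = tp + tn + fp + fn
    accuracy = (tp + tn) / n
    sensitivity = tp / (tp + fn)   # share of actual 1s predicted as 1
    specificity = tn / (tn + fp)   # share of actual 0s predicted as 0
    # Majority forecast: predict the most frequent outcome for every observation.
    share_ones = (tp + fn) / n
    majority_accuracy = max(share_ones, 1.0 - share_ones)
    return accuracy, sensitivity, specificity, accuracy - majority_accuracy

# Hypothetical outcomes and predictions, for illustration only.
y     = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_hat = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
acc, sens, spec, gain = classification_summary(y, y_hat)
print(f"accuracy {acc:.2f}, sensitivity {sens:.2f}, specificity {spec:.2f}, gain {gain:.2f}")
```

With a rare outcome, a high accuracy can coexist with a low sensitivity, which is why the gain over the majority forecast is the more informative number.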


5 Measures of Fit

Pseudo R-squared

Compare the value of the likelihood of the full model with that of an empty model:

M(full): $P(Y = 1 \mid X) = F(\beta_0 + \beta_1 X_1 + \dots + \beta_p X_p)$

M(empty): $P(Y = 1 \mid X) = F(\beta_0)$

$$\text{Pseudo } R^2 = 1 - \frac{\log L_{\max}(\text{Full})}{\log L_{\max}(\text{Empty})}$$

(also called McFadden R-squared)
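A sketch of the computation, assuming the fitted probabilities of the full model are available (the values below are hypothetical) and that both outcomes occur in the sample. For the empty model the maximized probability is simply the sample share of ones, whatever the link $F$, so its log-likelihood needs no fitting.

```python
import math

def bernoulli_loglik(y, p):
    """Sum over i of y_i log p_i + (1 - y_i) log(1 - p_i)."""
    return sum(yi * math.log(pi) + (1 - yi) * math.log(1 - pi) for yi, pi in zip(y, p))

def mcfadden_r2(y, p_full):
    """Pseudo R^2 = 1 - log Lmax(Full) / log Lmax(Empty)."""
    n = len(y)
    ybar = sum(y) / n   # empty-model MLE of P(Y = 1); assumes 0 < ybar < 1
    loglik_empty = bernoulli_loglik(y, [ybar] * n)
    loglik_full = bernoulli_loglik(y, p_full)
    return 1.0 - loglik_full / loglik_empty

y = [1, 0, 1, 1, 0, 0, 1, 0]
p_full = [0.8, 0.3, 0.7, 0.9, 0.2, 0.4, 0.6, 0.1]   # hypothetical fitted probabilities
print("McFadden R-squared:", mcfadden_r2(y, p_full))
```

If the full model predicts no better than the sample share, the two log-likelihoods coincide and the pseudo R-squared is 0.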


Likelihood ratio test

The likelihood ratio (LR) statistic for $H_0: \beta_1 = \dots = \beta_p = 0$ is

$$LR = 2\{\log L_{\max}(\text{Full}) - \log L_{\max}(\text{Empty})\}.$$

We reject $H_0$ for large values of LR.

The LR statistic can be used to compare any pair of nested models. Suppose that $M_1$ is a submodel of $M_2$, and we want to test $H_0: M_1 = M_2$. Then, under $H_0$,

$$LR = 2\{\log L_{\max}(M_2) - \log L_{\max}(M_1)\} \xrightarrow{d} \chi^2_k,$$

where $k$ is the number of restrictions (i.e. the difference in the number of parameters between $M_2$ and $M_1$).


In practice, we work with the P-value. For example, if $k = 4$ and $LR = 7.8$, the P-value is the area under the $\chi^2_4$ density to the right of 7.8, which is about 0.099.

[Figure: density of the chi-squared distribution with 4 degrees of freedom, with LR = 7.8 and the corresponding P-value indicated.]
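The P-value computation can be sketched with the standard library only. The chi-squared survival function is obtained here from the power series of the regularized lower incomplete gamma function, which is adequate for moderate values of LR; statistical packages use more robust routines. The two log-likelihood values are hypothetical, chosen so that LR = 7.8 with k = 4. (As a cross-check, the 10% critical value of $\chi^2_4$ is about 7.78, so the P-value is just under 0.10.)

```python
import math

def chi2_sf(x, k):
    """P(chi^2_k > x) = 1 - P(k/2, x/2), with the regularized lower incomplete
    gamma P(s, z) from its power series (adequate for moderate x)."""
    if x <= 0:
        return 1.0
    s, z = k / 2.0, x / 2.0
    term, total, m = 1.0, 1.0, 0
    while term > 1e-16 * total:
        m += 1
        term *= z / (s + m)
        total += term
    lower = math.exp(s * math.log(z) - z - math.lgamma(s + 1.0)) * total
    return max(0.0, 1.0 - lower)

def lr_test(loglik_m2, loglik_m1, k):
    """LR = 2 {log Lmax(M2) - log Lmax(M1)} and its asymptotic chi^2_k P-value."""
    lr = 2.0 * (loglik_m2 - loglik_m1)
    return lr, chi2_sf(lr, k)

# Hypothetical maximized log-likelihoods giving LR = 7.8 with k = 4 restrictions.
lr, p_value = lr_test(loglik_m2=-100.0, loglik_m1=-103.9, k=4)
print(f"LR = {lr:.1f}, P-value = {p_value:.3f}")   # LR = 7.8, P-value = 0.099
```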


Percentage correctly predicted

This is a measure of predictive accuracy, defined as

$$\frac{1}{n} \sum_{i=1}^{n} I(y_i = \hat{y}_i).$$

One minus this percentage is an estimate of the error rate of the prediction rule.

[This estimate is overly optimistic, since it is based on the estimation sample. It is better to compute it using out-of-sample predictions.]


6 Other Limited Dependent Variable Models

Censored regression models

Examples: car expenditures, income of females, ... The value zero will often be observed, so the dependent variable is a mixture of a discrete (at 0) and a continuous variable (Tobit models).

Truncated regression models

Data above or below a certain threshold are unobserved: these observations are not available, so we have a selected sample.


Count data

Examples: number of strikes in a firm, number of car accidents, number of children

Poisson-type models

Multiple choice data

Examples: mode of transport

Multinomial logit/probit

Ordered response data

Examples: educational level, credit ratings (B/A/AA/...)

Ordered probit


7 Exercise

We will analyse the data in the file grade.wf1. We have a sample of students, and we want to study the effect of the introduction of a new teaching method, called PSI. The dependent variable is GRADE, indicating whether or not a student's grade improved after the introduction of the new method. The explanatory variables are

PSI: a binary variable indicating whether or not the student was exposed to the new teaching method.

TUCE: the score on a pretest that indicates entering knowledge of the material to be taught.

We will now run a logit regression of GRADE on a constant, PSI and TUCE.


1. Why do we add TUCE to the regression model if we are only interested in the effect of PSI?

2. Interpret the estimated regression coefficients.

3. Take a student with TUCE = 20. (a) Estimate the probability that he will improve his grade if he follows the PSI method. (b) What is this probability if he does not follow the PSI method? (c) Will this student improve his grade if PSI = 1? (d) Compute the log odds (of improving the grade versus not) for this student, once for PSI = 1 and once for PSI = 0. Compute the difference between these two log odds, i.e. the log odds ratio. Compare it with the regression coefficient of PSI.

4. Compute the percentage of correctly classified observations and comment (you can use View/Expectation-Prediction table).

5. The output shows the value of the LR statistic. How is this value computed?

6. Now run a probit regression. Is there much difference between the estimates? And between the percentages of correctly classified observations?
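For question 3, the mechanics can be checked with any set of logit coefficients. The values below are hypothetical and are NOT the estimates from grade.wf1; the sketch only shows that, in a logit model, the difference in log odds between PSI = 1 and PSI = 0 (at a fixed TUCE) equals the PSI coefficient.

```python
import math

# Hypothetical logit coefficients, NOT the grade.wf1 estimates.
b_const, b_psi, b_tuce = -6.0, 2.0, 0.2

def prob_improve(psi, tuce):
    """Logit model: P(GRADE = 1) = 1 / (1 + exp(-(b_const + b_psi*PSI + b_tuce*TUCE)))."""
    index = b_const + b_psi * psi + b_tuce * tuce
    return 1.0 / (1.0 + math.exp(-index))

def log_odds(p):
    """log(p / (1 - p)): log odds of improving versus not improving."""
    return math.log(p / (1.0 - p))

p1 = prob_improve(psi=1, tuce=20)
p0 = prob_improve(psi=0, tuce=20)
diff = log_odds(p1) - log_odds(p0)
print(f"P(improve | PSI=1) = {p1:.3f}, P(improve | PSI=0) = {p0:.3f}")
print(f"difference in log odds = {diff:.6f}, b_psi = {b_psi}")
```

Because the log odds of a logit model are linear in the regressors, the TUCE term cancels in the difference, whatever value of TUCE is used.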
